State overview: operator state (Operator State), keyed state (Keyed State), and state backends (State Backends)

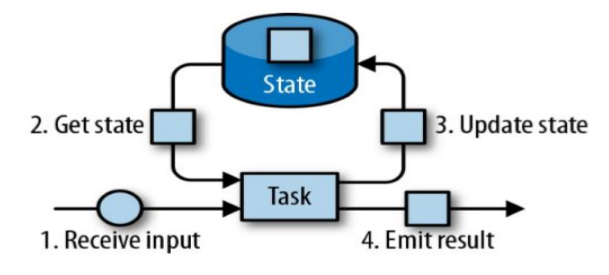

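What is state

1: All the data that a task maintains and uses to compute a result is part of that task's state

2: State can be thought of as a local variable that the task's business logic can access

3: Flink manages state for you, including state consistency, failure handling, and efficient storage and access, so developers can focus on application logic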

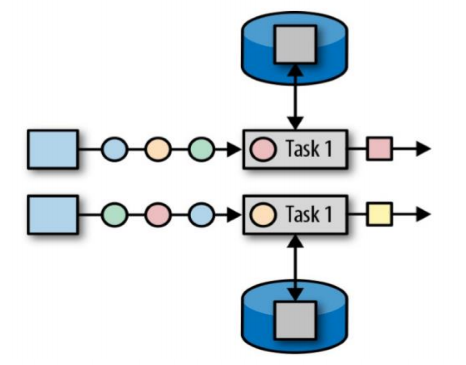

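Operator State

1: Operator state is scoped to an operator task: all records processed by the same parallel task can access the same state

2: The state is shared by everything the same subtask processes

3: Operator state cannot be accessed by any other subtask, whether it belongs to the same operator or to a different one

List state: represents state as a list of entries

Union list state: also represents state as a list of entries. It differs from regular list state in how it is restored after a failure or when the application starts from a savepoint: regular list state is split across the parallel subtasks, while union list state hands every subtask the complete list

Broadcast state: intended for the special case where an operator has multiple tasks and every task holds identical state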

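    import com.atguigu.bean.SensorReading;
    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.api.java.tuple.Tuple2;
    import org.apache.flink.streaming.api.checkpoint.ListCheckpointed;

    import java.util.Collections;
    import java.util.List;

    class MyCountMap implements MapFunction<SensorReading, Tuple2<String, Integer>>, ListCheckpointed<Integer> {

        // Operator state: one counter per subtask, shared by every record it processes
        private Integer count = 0;

        @Override
        public Tuple2<String, Integer> map(SensorReading value) throws Exception {
            count++;
            return new Tuple2<>(value.getId(), count);
        }

        // On each checkpoint, hand the counter to Flink as a one-element list
        @Override
        public List<Integer> snapshotState(long checkpointId, long timestamp) throws Exception {
            return Collections.singletonList(count);
        }

        // On recovery, add up the entries assigned to this subtask
        @Override
        public void restoreState(List<Integer> state) throws Exception {
            for (Integer integer : state) {
                count += integer;
            }
        }
    }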
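The difference between regular list state and union list state on restore can be illustrated without a cluster. The following is a plain-Java sketch, not Flink code: the class `RestoreModes` and its methods are hypothetical, and the round-robin assignment in `evenSplit` is an assumption made for illustration (Flink only promises that the list is divided among the subtasks, not a particular order).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Plain-Java illustration of the two restore modes; not Flink code. */
final class RestoreModes {

    /** Regular list state: each checkpointed entry goes to exactly one subtask. */
    static List<List<Integer>> evenSplit(List<Integer> entries, int parallelism) {
        List<List<Integer>> perSubtask = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            perSubtask.add(new ArrayList<>());
        }
        // Round-robin assignment is an assumption of this sketch.
        for (int i = 0; i < entries.size(); i++) {
            perSubtask.get(i % parallelism).add(entries.get(i));
        }
        return perSubtask;
    }

    /** Union list state: every subtask receives the complete list. */
    static List<List<Integer>> union(List<Integer> entries, int parallelism) {
        List<List<Integer>> perSubtask = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            perSubtask.add(new ArrayList<>(entries));
        }
        return perSubtask;
    }

    /** Same idea as restoreState in MyCountMap: sum whatever entries arrive. */
    static int restoreCount(List<Integer> restored) {
        int count = 0;
        for (int value : restored) {
            count += value;
        }
        return count;
    }

    public static void main(String[] args) {
        // Counters checkpointed by three old subtasks, restored with parallelism 2.
        List<Integer> checkpointed = Arrays.asList(5, 7, 9);
        System.out.println(evenSplit(checkpointed, 2)); // [[5, 9], [7]]
        System.out.println(union(checkpointed, 2));     // [[5, 7, 9], [5, 7, 9]]
    }
}
```

With the even split, summing the restored entries keeps the total across subtasks at 21 (14 + 7). With the union mode each subtask would see all three entries, so a function that simply sums them would count every record once per subtask; union list state is meant for cases where each subtask picks out only the entries it needs.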


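Keyed State

1: Keyed state is maintained and accessed according to the key defined on the input stream

2: Flink maintains one state instance per key and routes all records with the same key to the same operator task, which maintains and processes the state for that key

3: When a task processes a record, state access is automatically scoped to the key of that record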
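The automatic scoping of state to the current key can be modeled in a few lines of plain Java. This is only a sketch of the idea, not Flink's implementation: `KeyScopedCounter`, `process`, and the internal map are hypothetical names, with the map standing in for the per-key storage a state backend would provide.

```java
import java.util.HashMap;
import java.util.Map;

/** Plain-Java model of key-scoped state; names are illustrative, not Flink API. */
final class KeyScopedCounter {
    // One state slot per key, standing in for what a keyed state backend keeps.
    private final Map<String, Integer> statePerKey = new HashMap<>();
    // Set by the "runtime" before each record is handed to the user logic.
    private String currentKey;

    /** What the runtime does: select the record's key, then call the user logic. */
    int process(String key) {
        currentKey = key;
        return countRecord();
    }

    /** User logic: reads and updates "its" state without ever naming the key. */
    private int countRecord() {
        int next = value() + 1;
        update(next);
        return next;
    }

    private int value() {
        return statePerKey.getOrDefault(currentKey, 0);
    }

    private void update(int newValue) {
        statePerKey.put(currentKey, newValue);
    }

    public static void main(String[] args) {
        KeyScopedCounter task = new KeyScopedCounter();
        System.out.println(task.process("sensor_1")); // 1
        System.out.println(task.process("sensor_2")); // 1
        System.out.println(task.process("sensor_1")); // 2
    }
}
```

The user logic in `countRecord` never mentions a key, yet each sensor gets its own counter, which is the behavior the points above describe.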

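1: Value state: represents state as a single value

2: List state: represents state as a list of entries

3: Map state: represents state as a set of key-value pairs

4: Reducing state and aggregating state: represent state as a single value that aggregates every element added to it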

    package com.atguigu.state;

    import com.atguigu.bean.SensorReading;
    import org.apache.flink.api.common.functions.RichMapFunction;
    import org.apache.flink.api.common.state.ValueState;
    import org.apache.flink.api.common.state.ValueStateDescriptor;
    import org.apache.flink.api.java.tuple.Tuple2;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.datastream.DataStream;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

    public class KeyedState {
        public static void main(String[] args) throws Exception {
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            // Parse comma-separated socket lines into SensorReading objects
            DataStreamSource<String> socketTextStream = env.socketTextStream("hadoop112", 7777);
            DataStream<SensorReading> inputStream = socketTextStream.map(value -> {
                String[] split = value.split(",");
                return new SensorReading(split[0], Long.parseLong(split[1]), Double.parseDouble(split[2]));
            });

            // Count the temperature readings received for each sensor id
            SingleOutputStreamOperator<Tuple2<String, Integer>> map =
                    inputStream.keyBy(SensorReading::getId).map(new MyCountMap2());
            map.print();
            env.execute();
        }
    }

    class MyCountMap2 extends RichMapFunction<SensorReading, Tuple2<String, Integer>> {
        // Keyed state: Flink keeps a separate counter for every key
        private ValueState<Integer> myKed;

        @Override
        public void open(Configuration parameters) throws Exception {
            myKed = getRuntimeContext().getState(new ValueStateDescriptor<>("key_count", Integer.class));
        }

        @Override
        public Tuple2<String, Integer> map(SensorReading value) throws Exception {
            // value() returns null the first time a key is seen
            Integer count = myKed.value();
            count = (count == null) ? 1 : count + 1;
            myKed.update(count);
            return new Tuple2<>(value.getId(), count);
        }
    }


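State Backends

1: Every time a record arrives, a stateful operator task reads and updates its state

2: Efficient state access is critical for low-latency processing, so each parallel task keeps its state locally for fast access. How state is stored, accessed, and maintained is determined by a pluggable component called the state backend

3: A state backend has two main jobs: managing local state, and writing checkpoint state to remote storage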

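Choosing a state backend

➢ MemoryStateBackend

• A memory-level state backend: it manages keyed state as objects on the TaskManager's JVM heap and stores checkpoints in the JobManager's memory

• Characteristics: fast and low latency, but unreliable, because checkpoints kept in JobManager memory do not survive a JobManager failure

➢ FsStateBackend

• Stores checkpoints on a remote persistent file system; local state, as with MemoryStateBackend, lives on the TaskManager's JVM heap

• Combines in-memory local access speed with better fault tolerance

➢ RocksDBStateBackend

• Serializes all state and stores it in a local RocksDB instance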
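To make "pluggable" concrete, here is a small plain-Java sketch of the idea. Nothing in it is Flink API: `SketchBackend`, `HeapSketchBackend`, and `SerializedSketchBackend` are hypothetical names, and the serialized variant uses an in-memory map of byte arrays as a stand-in for RocksDB. It only illustrates that operator logic is written once against an interface while the backend decides whether state lives as objects or as serialized bytes.

```java
import java.nio.ByteBuffer;
import java.util.HashMap;
import java.util.Map;

/** Plain-Java sketch of a pluggable state backend; all names are hypothetical. */
final class BackendDemo {

    /** Minimal stand-in for the contract a state backend offers to operators. */
    interface SketchBackend {
        void put(String key, int value);
        Integer get(String key);
    }

    /** Heap style: keeps live objects, as the memory and file system backends do. */
    static final class HeapSketchBackend implements SketchBackend {
        private final Map<String, Integer> objects = new HashMap<>();
        public void put(String key, int value) { objects.put(key, value); }
        public Integer get(String key) { return objects.get(key); }
    }

    /** RocksDB style: every write serializes and every read deserializes. */
    static final class SerializedSketchBackend implements SketchBackend {
        // A map of byte arrays stands in for the on-disk store.
        private final Map<String, byte[]> bytes = new HashMap<>();
        public void put(String key, int value) {
            bytes.put(key, ByteBuffer.allocate(Integer.BYTES).putInt(value).array());
        }
        public Integer get(String key) {
            byte[] raw = bytes.get(key);
            return raw == null ? null : ByteBuffer.wrap(raw).getInt();
        }
    }

    /** Operator logic, written once against the interface. */
    static int increment(SketchBackend backend, String key) {
        Integer current = backend.get(key);
        int next = (current == null) ? 1 : current + 1;
        backend.put(key, next);
        return next;
    }

    public static void main(String[] args) {
        SketchBackend[] backends = {new HeapSketchBackend(), new SerializedSketchBackend()};
        for (SketchBackend backend : backends) {
            increment(backend, "sensor_1");
            System.out.println(increment(backend, "sensor_1")); // 2 for both backends
        }
    }
}
```

The trade-off mirrors the list above: the heap variant is fast but bounded by JVM memory, while the serialized variant pays a conversion cost on every access in exchange for keeping state outside the heap.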

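Stateful programming

Emit an alert whenever two consecutive temperature readings from the same sensor differ by 10 or more. Example output: (sensor_1,35.8,50.0)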

    package com.atguigu.state;

    import com.atguigu.bean.SensorReading;
    import org.apache.flink.api.common.functions.RichFlatMapFunction;
    import org.apache.flink.api.common.state.ValueState;
    import org.apache.flink.api.common.state.ValueStateDescriptor;
    import org.apache.flink.api.java.tuple.Tuple3;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.datastream.DataStream;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.util.Collector;

    public class KeyedStateTest {
        public static void main(String[] args) throws Exception {
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            // Parse comma-separated socket lines into SensorReading objects
            DataStreamSource<String> socketTextStream = env.socketTextStream("hadoop112", 7777);
            DataStream<SensorReading> inputStream = socketTextStream.map(value -> {
                String[] split = value.split(",");
                return new SensorReading(split[0], Long.parseLong(split[1]), Double.parseDouble(split[2]));
            });

            // Alert when consecutive readings of the same sensor differ by at least 10
            SingleOutputStreamOperator<Tuple3<String, Double, Double>> map =
                    inputStream.keyBy(SensorReading::getId).flatMap(new MyFlatMap(10.0));
            map.print();
            env.execute();
        }
    }

    class MyFlatMap extends RichFlatMapFunction<SensorReading, Tuple3<String, Double, Double>> {
        // Minimum temperature jump that triggers an alert
        private final double threshold;

        // Keyed state: the previous temperature of the current sensor
        private ValueState<Double> lastTempState;

        public MyFlatMap(double threshold) {
            this.threshold = threshold;
        }

        @Override
        public void open(Configuration parameters) throws Exception {
            lastTempState = getRuntimeContext().getState(new ValueStateDescriptor<>("last_State", Double.class));
        }

        @Override
        public void flatMap(SensorReading value, Collector<Tuple3<String, Double, Double>> out) throws Exception {
            // Previous temperature for this key; null on the first reading
            Double lastTemp = lastTempState.value();
            if (lastTemp != null) {
                double diff = Math.abs(value.getTemperature() - lastTemp);
                if (diff >= threshold) {
                    out.collect(new Tuple3<>(value.getId(), lastTemp, value.getTemperature()));
                }
            }
            // Remember the current temperature for the next reading
            lastTempState.update(value.getTemperature());
        }

        // Keyed state is only accessible while a keyed record is being processed,
        // so it is not cleared in close().
    }
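The alert rule can be checked by hand before running it against a socket. The sketch below replays the same comparison in plain Java, with a `HashMap` standing in for the per-key `ValueState`; the class `TempJumpTrace` and the sample readings are made up for illustration and are not part of the original program.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java replay of the alert rule; the map stands in for ValueState. */
final class TempJumpTrace {

    /** Each reading is {id, temperature}; returns the alerts in arrival order. */
    static List<String> alerts(String[][] readings, double threshold) {
        Map<String, Double> lastTempPerKey = new HashMap<>();
        List<String> out = new ArrayList<>();
        for (String[] reading : readings) {
            String id = reading[0];
            double temp = Double.parseDouble(reading[1]);
            Double lastTemp = lastTempPerKey.get(id); // null on the first reading of a key
            if (lastTemp != null && Math.abs(temp - lastTemp) >= threshold) {
                out.add("(" + id + "," + lastTemp + "," + temp + ")");
            }
            lastTempPerKey.put(id, temp); // update the "state" for this key
        }
        return out;
    }

    public static void main(String[] args) {
        String[][] readings = {
            {"sensor_1", "35.8"},
            {"sensor_2", "15.4"},
            {"sensor_1", "50.0"}, // jump of about 14.2 on sensor_1: alert
            {"sensor_2", "20.0"}, // jump of about 4.6 on sensor_2: no alert
        };
        System.out.println(alerts(readings, 10.0)); // [(sensor_1,35.8,50.0)]
    }
}
```

Because the previous temperature is stored per sensor, the interleaved sensor_2 readings do not interfere with the sensor_1 comparison.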


Original: https://blog.csdn.net/m0_57126456/article/details/121502871

Author: 天才少年137

Title: Flink——状态管理